This is a story about a job long, long ago.

Having gone through this, I developed a set of heuristic flags for sizing up the organization you work for. While these are context sensitive, I tried to keep a little of the context in the flags to give the reader some idea of what I saw. Seeing these things as they happen is extremely difficult. When you are in the moment they can look like a compromise, yet remove yourself from the situation for 2-3 months and suddenly they become “OH MY GOD, I can’t believe they even asked us to do that!”

There are many reasons some of us persevered through lots and lots of these yellow and red flags. People don't like change, and interviewing is change. People like to see things through to the end, and quitting feels like failure. I genuinely liked the people I worked with, or at least lots of them, but that doesn’t change the culture of the sociopaths in charge (Gervais Principle). Hindsight is 20/20: looking back, I should have quit way earlier, but recognizing the signs in real time is much more difficult than it would seem when writing this down years later.

(the stuff in italics are the warning signs)

It all started slowly. That’s the best way to disguise failure. It’s how we can convince ourselves, “we're just learning”…

I had been working for this company about three months when it was decided that we needed to go Agile. We hired two product owners, did a couple of days of training, made a backlog, estimated, planned a sprint and brought new team members on board. We didn’t have a scrum master, but we ‘were’ looking for one. Can’t have everything at once, we told ourselves.

Yellow Flag 1: Starting a process by ignoring pieces of the process before trying them is folly.

We started this grand adventure with a meeting where it was deemed that the biggest, oldest, crappiest, hard-to-read, difficult-to-modify code would be the starting point for the new hotness. Not “let's rewrite it”, but take the ‘scroll of insanity’, as it was called, and build the new hotness on top of it. But we sallied forth: new process, one bad decision won’t kill us, and we plodded along for a couple of months.

Then the QA manager had a life event and never recovered. He left the company, and the rest of this story is sans QA having a voice. We told ourselves we could work without a manager ‘for the team’.

Yellow Flag 2: Working without a direct supervisor for more than 3 months.

Don't get me wrong, I don't want to work for just any manager, and neither should you. But if a decent (notice, NOT GOOD) manager doesn't show up within 3 months, I can think of two explanations: the company doesn't attract quality people, or they aren't making it their highest priority.

Soon our VP was walking through the building explaining the agile process to other people and board members in exquisite detail. Basically lying to their faces, in front of ours.

Red Flag 1: Management tells everyone, including you, “we are Agile”, then proceeds to prevent you from being a self-directed team, doing backlog estimations, working on a single sprint at a time or defining done. Now that I see this, I wonder if it was a sadistic way of daring us to challenge him.

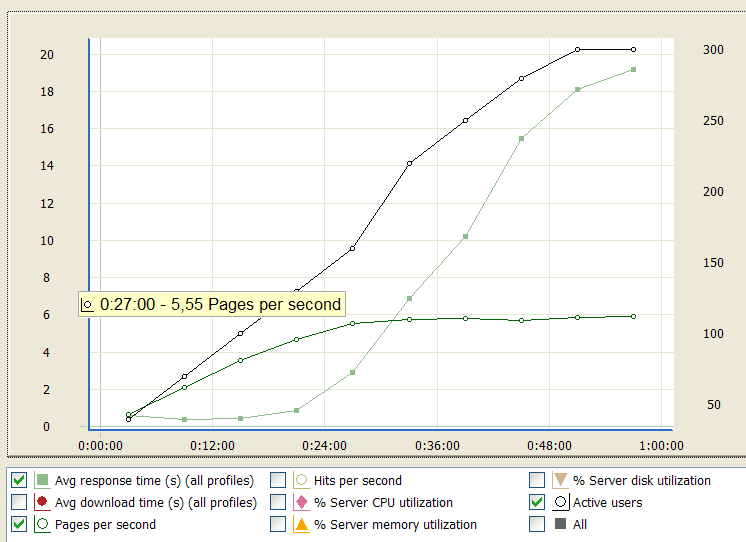

At this point we had a new development architect team. They had a working pre-alpha. Since it was a concurrent B2B web application, the VP decided we should load test it. I can handle a new challenge and was excited to get started.

The first round of testing showed that two concurrent users crashed the new hotness. I spent a week tweaking the load test, 'cause clearly I was missing something. After showing the development manager and VP the results, I was called a liar, and the load part of testing was given to someone else. Two weeks later he had the same results I did, and the development manager admitted maybe there was an issue in our session management.

Meanwhile I was off to functional automation. Automation is brittle and the 3rd party GUI libraries weren't helping. No hooks, dynamic IDs, I can’t work with this. I of course had no manager to explain this to, but was informed, “It helps the developers move faster.” Which just puts QA, without a voice, further behind…

At this point the only thing keeping me at the company is the Warhammer Fantasy board between a developer and myself.

A co-worker and I start to share any job openings we find in an effort to get out, but due to the recession, there isn’t much.

As all this was happening, we moved to production with two active sprints. That in and of itself wasn’t insane, until you note that shortly we had three active sprints in production with only two sprint teams. At one point we had three active sprints in production being worked on for bugs, an active sprint in stage, one in QA and a sixth in development.

Yellow Flag 3: Having multiple sprints active per sprint team.

Red Flag 2: Having six different sprints being actively worked on by two sprint teams.

We had a sprint board which was viewed as sacred ground: only the PO could touch, move or write on it. At one point one of the testers called the board the joke that it was, and said that without the ability to move, edit or touch things, it was stupid. He said this to the PO’s face. Within 10 minutes he was in the VP’s office being told to apologize or he was fired.

Red Flag 3: Anyone who criticizes the process being threatened with their job.

So, we were fast approaching an imaginary deadline for an alpha version of our software, and we were behind, working OT and generally trying to get 'er done. This particular company prided itself on the two weeks over Christmas only requiring a skeleton crew, so most of us didn’t mind the OT; in a couple of weeks most of us would get a respite and then come back refreshed. Then the VP came out and announced, “Everyone will be working over Christmas, no PTO except on the holidays themselves, and mandatory 50 hours every other week.” Yes, they had canceled Christmas. For a date that only they believed in, never mind that most people were already doing 60-80s.

Yellow Flag 4 (approaching red): Management changes work schedules for you.

Projects dead. QA ignored. Automation maintenance nightmare. VP unwilling to compromise. 5 active sprints.

The day I gave my two-week notice I had to write a letter to quit, 'cause I couldn’t find the VP (who was my manager) for the entire day. He never did show up.

So he politely asked me not to inform people of my two-week deadline; he’d like to handle it. Of course I was more than willing to allow him to handle it in his own way. A week rolled by with no announcement, so I was unable to tell anyone. As a result no one thought it important to learn and take over the tasks that I was doing. Week two started with a scrum where people started to assign me work, and at this point I was tired of waiting and kinda upset. I told the entire team that most of that work needed to be someone else’s, 'cause Friday was my last day. Everyone was upset, and yet officially my leaving still wasn’t announced till one day before my last.

To top off a wonderful last week of trying hard to make sure people were prepped for me being gone, my exit interview consisted of HR telling me over the phone, “No, that’s not why you’re leaving.” What do you say to that?

Red Flag 4: The process of handing off your knowledge to someone is stymied, and no one in management cares why you are leaving. (Albeit this flag is more for people still there.)

Monday, November 17, 2014

Introspection on why I left...

Monday, November 10, 2014

James Bach and Dr. James Whittaker: An attempt to understand viewpoints

Introduction, Intentions and Limits

I don't have the political ties to many of the big thinkers of the industry. I have seen James Bach speak, had him address my blog, addressed his blog, read his book and I have even asked him a question at CAST, but I don't know him personally. I have attempted to address Dr. Whittaker in his blog, but have never gotten a response and I have never met him. I have read Dr. Whittaker's (in)famous exploratory testing book, and my post on it is currently the second most popular I have written. I take that to mean I am not the only one who has interest in Whittaker's views and I suspect I'm not the only one to never have met him. In my consideration of both these individuals, I am not attempting to make it personal. As you read this, please keep in mind that this is my attempt to understand their viewpoints. This is an attempt to parse the words of each of them to gain insights.

I came from CAST a few months ago and while I'm not going to directly talk about CAST, I want to talk about a subject near and dear to my heart. Most of James Bach's talk was about the process of testing, but one piece was clearly controversial. This was Testing vs Checking and the ideas around it. In talking with Bach, I now know I hold disagreements with some of his model. I mostly kept silent regarding this, except for my consideration of Heuristics and Algorithms, where I pondered whether what humans and computers do is equal, and it just happens that humans are complex and currently unknown compared to a computer. This leads down the free will question that is unanswerable, which is why I didn't deep dive into that subject. I am still resisting that particular topic for now, but I may return to it at a later date.

Bach has interesting ways of subdividing his world, with the terms Testing and Checking, with the word Test meaning something different in the past, even for Bach. Some day I will do a word of the week on Test, but that isn't today's subject either. In fact I'm still dodging giving my detailed, full opinion on checking vs testing, but be assured it will come up. Today's topic actually is about my studies of James Whittaker and what appears in my view to be James Bach's answer to that viewpoint. I chose these two in part because I was interested in why Whittaker left testing and, for Bach's part, he is a prolific exemplar of a testing professional for me to consider. Bach has also addressed some areas that Whittaker has talked about, making him an easy-to-cite exemplar as well. This is not meant to be name calling; rather, I am trying to describe how I see these thinkers in test holding positions and viewpoints that are deeply held by many individuals. Certainly other people have variations of these viewpoints and certainly I may be misreading parts of these viewpoints. I struggle not to put words in people's mouths, but I have to admit some of this is speculation based upon the comments I've found that Whittaker has made between 2008ish and 2014. Those are limited and I have studied them over months, so my sourcing is limited. James Bach, on the other hand, is prolific, which means I might be missing data. With that said, on with the show!

Warning: This is a long winded personal discovery post, you have been warned.


What Dr. Whittaker has said on Testing

In .NET Rocks 408 from 2009, Whittaker complained about only having guiding practices, with no solid knowledge, saying,

“I'll just be happy when testing works... because they have guiding practices, guiding theory....”

Later he noted how more testers means, in his words, lazy developers:

“We claim in many groups a 1 : 1 dev/testing ratio [in Microsoft]... Google [has]... 1:10 tester ratio... 1:1, you think oh wow, that is a lot of testing, also a lot of lazy developers to be perfectly honest... At Google they have no choice but to be a little more careful in development.”

Whittaker wants to remove as much inventive thinking from the process as possible, saying things like “In Visual Studio 2010... much of that [change analysis] we will automate completely..." and

“What can you tell me about the new tools... we think you will be able to reproduce every bug.” That is a fairly wide and amazing claim. He even starts talking about intelligent code that will find bugs all by itself: “Only if it is the right sort of unit testing. If it isn't finding actionable bugs... throw it out. If the test cases themselves were feature aware, they know, they know what bugs they are good at finding... this is the future I want.” Why do you need to inject intelligent reasoning when all problems have been solved before, but are hard-coded to one particular program? “I'm convinced, on this planet, everything that can be tested, has been tested.” In fact, why not remove testing as an activity altogether? Just have the machine figure out what needs to be tested, even before you hit compile! “We [test] need to be invited in to be a full fledged member... we have to get to the point where we [test] are contributing more. Can we get software testing to be more like spell check is in Word.... this needs to be the focus of software testers. These late cycle heroics and these testers who are married to late cycle heroes are Christmas Tester Past.”

Dr. Whittaker imagines a future where testers are more-or-less developers. They might have a few test skills but basically once the magic tools exist, then developers should be solving these problems. But of course he might have changed his mind since then? I mean that was in 2009! What about modern times?

Quality is no less important, of course, but achieving it requires a different focus than in the past. Hiring a bunch of testers will ensure that you need those testers. Testers are a crutch. A self-fulfilling prophesy. The more you hire the more you will need. Testing is much less an actual role now as it is an activity that has blended into the other activities developers perform every day. You can continue to test like its 1999, but why would you?

- March 25, 2014, http://www.stickyminds.com/interview/leadership-and-career-success-purpose-interview-james-whittaker

Nope, Dr. Whittaker's viewpoint has gotten even more extreme. Dr. Whittaker might say I am a developer given the 'advanced' automation I write, but he certainly thinks most testers should just go away. He would rather have testers all fired, because [manual?] testers just enable developers to write bad code. Why does he feel this way? Well, first of all, he's in a super-large company that hires a great deal of the best talent there is. Microsoft has some amazing developers and clearly they can write some amazing code, so maybe he sees them and assumes everyone else is like that. Before he was at Microsoft, he worked for Google, so his perspective might be skewed. Perhaps Microsoft and Google can get away with fewer or no testers. Facebook seems to do it. He certainly seems to hint at it with his most recent blog post on the subject. His infamous 'traditional test is dead' talk also seems to indicate that you need enough people to dog food your product, which requires a large community of people willing to file bugs for you. That does not apply to everyone, but he might not realize that? Dr. Whittaker's history has, as best I can tell, been mostly about bigger companies and teaching. Perhaps there are other reasons I have not been able to discover. I'd love to hear from Dr. Whittaker! Clearly James Bach has a reply...

James Bach and Automation

James Bach, a man who consults with and trains hundreds (or more?) of testers each year, also has an interesting vantage point. He mostly approaches testing as an intelligent gathering of information through investigation. He argues, in essence, that any investigation made by a programmer in order to write automated tests (i.e. "checks"), or the execution of those automated tests, is in fact not equal to the investigation of a human being. All of Dr. Whittaker's magic tools make less sense in the mind of Bach. Not that Bach doesn't want these tools, but he would argue that without an intelligent human, the tool provides no additional value. He has gone so far as to start labeling automated testing as checking. Not saying checks have no value, but saying that their scope is limited compared to a human. I think Bach would even argue that an AI-oriented test method, load testing, model driven testing and other "beyond human" testing could be done by a tester, given infinite amounts of time and/or humans. To be clear, James Bach seems to not view it as possible that there are tests that are beyond human, but I will address this later. Therefore, I could simplify the argument to this:

if (Human.Testing > Computer.Testing) throw new DoNotOverloadWord("Computer Testing and Human Testing are not the same!");

Ironically, Dr. Whittaker might agree with that simplification if only you change the ">" to "<". While I might agree with James Bach in his basic point, I think that there are a few concerns. One is that trying to change a word used in many different cultures, not just software testing, to something new just makes another standard. He's just added another definition to an already complicated word, making it even harder to understand. Worse yet, it seems like in defining the word that way, Bach has accidentally made his own standard for the word test, which will compete with other standards. I don't know if Bach wants to be a sort of defacto-ISO standard or not, but that's how it might become. I certainly sometimes see how his passionate arguments could be perceived as an attempt to be a sort of standard. On the other hand, I also hate the answer 'it depends on context' (without something to back it up), so at least when I read Bach's blogs, I know what he means when he says checking. Dr. Whittaker doesn't seem to put nearly as much rigor into his words, making his ideas a little more slippery. I don't know that there is an ideal way of handling this problem. Perhaps that is part of why schools of testing showed up, so you know whose standard definitions you use.

When you say "Test" which definition are you using? The Bachian 2014 definition. Oh, you've not moved on to the 2015 definition? No, it's out now?

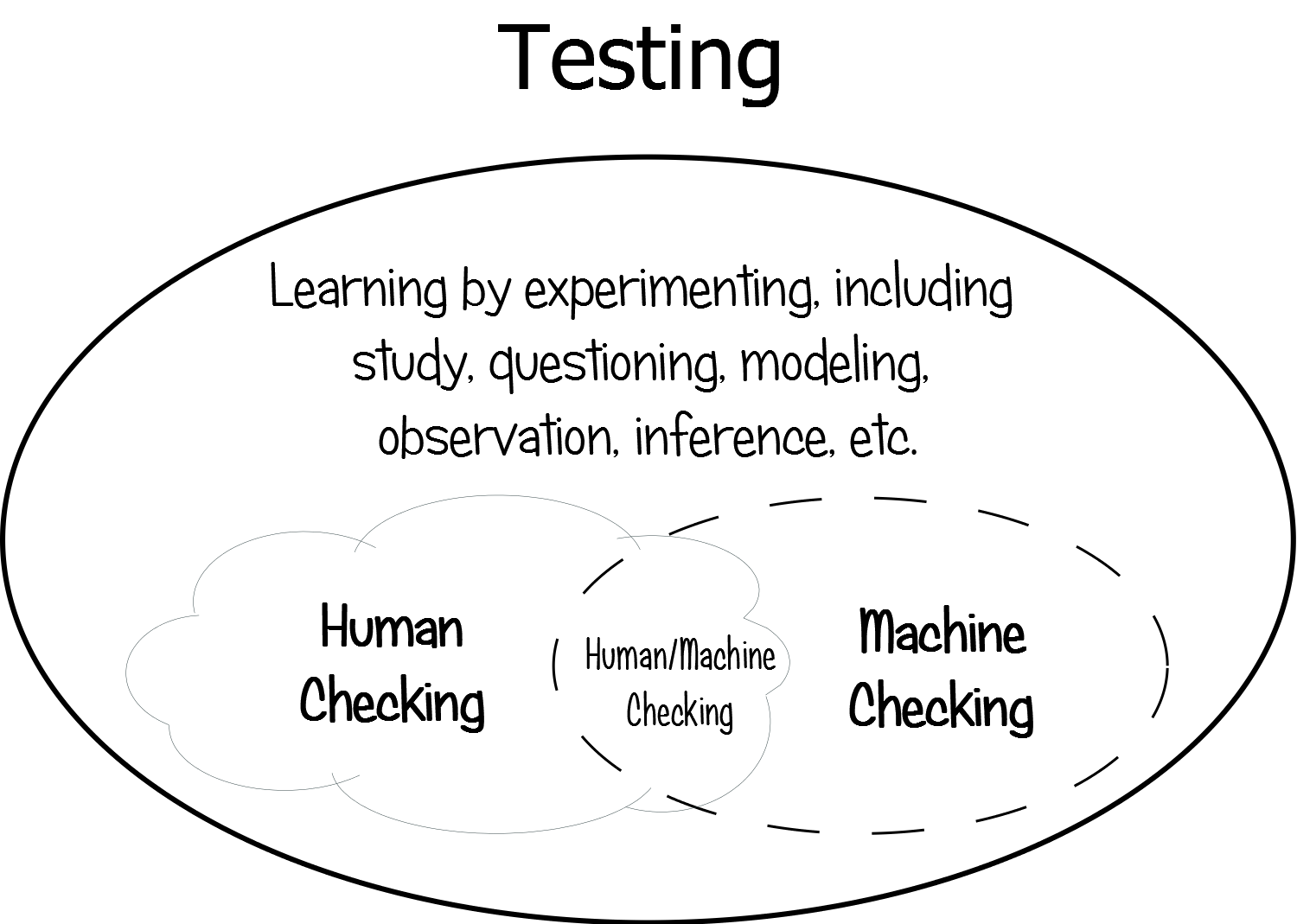

Back to the question of computers and whether they can go beyond humans in testing/checking. I take it that Bach feels that computers' ability to test/check cannot go beyond people from this graphic:

Machine Checking is inside Testing and James Bach defines Testing as:

Testing is the process of evaluating a product by learning about it through exploration and experimentation, which includes to some degree: questioning, study, modeling, observation, inference, etc.

So if you run a load test, the load that the machine generates allows you to observe the system under load, using machine generated metrics like page load times or database CPU usage. The question is where does the load test code belong? Is it 'checking' in that it navigates the pages? I suppose it does blow up if it can't load the page, which is a check, but the primary point of load tests is not about the functions of the page as much as the non-functional requirements. Is the data gathered by the load test a model? If I have a graph of users to page load times, isn't that a model... created by a machine? Bach does not seem to have a reply to this, other than to say that a machine embodies its script and thus is separate from humans. Whittaker on the other hand does not care as much about the distinction as he does about getting the technology good enough to get the data into engineers' hands without a middleman tester writing a specialized load test script. Better yet, in Whittaker's mind, get your users to do the testing by deploying the code.
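To make the load test question a little more concrete, here is a minimal Python sketch of what such a script tends to contain. Everything in it is my own illustration, not anything Bach or Whittaker wrote: `fake_page_load` is a hypothetical stand-in for a real HTTP request, and its latency formula is an invented toy. The point is only to show the two parts side by side: the pass/fail check on the page, and the users-to-page-load-time data that comes out the other end.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def fake_page_load(concurrent_users):
    """Hypothetical stand-in for an HTTP request: returns (status, seconds)."""
    # Assumed toy behaviour: latency grows with the square of the load.
    return 200, 0.05 + 0.001 * concurrent_users ** 2


def run_load_step(concurrent_users):
    """Drive one load level and return the mean page load time in seconds."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(
            pool.map(fake_page_load, [concurrent_users] * concurrent_users)
        )
    # The "check": a pass/fail decision the machine can make on its own.
    for status, _seconds in results:
        if status != 200:
            raise RuntimeError(f"page failed to load at {concurrent_users} users")
    return mean(seconds for _status, seconds in results)


def load_profile(levels):
    """Map each user count to its mean page load time."""
    return {users: run_load_step(users) for users in levels}


if __name__ == "__main__":
    for users, seconds in load_profile([1, 5, 10, 20]).items():
        print(f"{users:>3} users -> {seconds:.3f}s mean page load")
```

The status loop is clearly a check. The dictionary that `load_profile` returns is the part I keep asking about: the machine produced it, but someone still has to decide whether those numbers are acceptable.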

Another issue is that while the test executes, it is limited to the data and code written, even if you have a genetic algorithm for testing and use Google as a datasource. These are powerful algorithms and Google as a datasource outmatches my own knowledge base in a sense. While I agree this is perhaps different than a human who is perhaps in some way more advanced than said algorithms, it can also be beyond human. Perhaps because humans can reason and the algorithms are limited to the code written there is a distinction to be made, which is fair enough, even if I still am not 100% convinced. Is reasoning just another term for 'a process we don't fully understand' while code is something we can? Are 'tacit knowledge' and 'cultural understanding' really limited to just humans, or will computers with enough data be able to generate similar models some day? I admit human brains seem different, maybe we have a soul, maybe we have free will, maybe.... But to be fair, even with my imagined super-advanced testing system, I know 98% of automated testing systems are not attempting to approach these sorts of advancements. Even if our automation systems aren't nearly as good as I think they can be, does that mean that testing vs checking will always be a dichotomy, or will programs eventually get good enough that we can't tell the difference? And what if humans are always special and better, what about diminishing returns? Hand-washed clothing may be cleaner and possibly more water efficient, but a washing machine is preferred by nearly everyone in the modern world. Perhaps all of this doesn't matter right now, but Dr. Whittaker seems to think we're there. Maybe places like Microsoft are there? Maybe "Tester" as a role makes less sense as more of that role is automated away, and maybe when you throw enough developers at creating the automation, you can have most of your testing automated?

So then there is another question that comes to mind: if executing an "automated test" is checking, while the human is writing the algorithm to do the 'checking', is that testing? In speaking with Bach, it is not in his mind. My brain, thinking about the problem, writing code, creating models in my head about the system under 'test' (system under check?) is not testing according to Bach. Very interesting viewpoint, making my claim about this site being 98% about testing untrue in Bach's viewpoint. Dr. Whittaker would seem to say that of course that is testing, even if I personally shouldn't be doing it or at least I should also be writing production code.

To be fair, Dr. Whittaker also argues that users are better than machines or testers. This is a point both Whittaker and Bach might agree on, even if Bach might say only in some contexts. But in saying that, I think Dr. Whittaker loses the point that users of a product can change products quickly and are fickle. The mighty IE 6 failed and clearly IE is no longer top dog. Listening to users might have been part of that, but an insider advocating for the users also might have been helpful! Users often don't know what they want and what they want changes quickly. This has been known for years. What makes Dr. Whittaker think that all products have a single user base that only wants one set of features? One man's bug is another man's feature. For that matter, what about products that don't have an exploitable user base? How do you dog food software for shipping products (OMS) when it costs money every minute the software is broken? How about we dog food a pacemaker; that will work, right? James Bach would note that quality is not a thing you can build into software but rather a sort of relationship. I suspect that if you break the software too often, you harm the user's interest in that software and they will move on.

What can I see from here?

I very much appreciate that these giants of the industry allow me to stand on their shoulders and look out upon the sea of possibilities. I don't know exactly where I stand in an 'official' sense, but I am sure that both of them hold good ideas, ideas that should be investigated... nay, should be tested. To his credit, James Bach agrees and wants to continue to make his ideas better. The problem is that with different contexts there are different needs. I have pondered from time to time whether, if we could lock down context (e.g. E-Commerce website, X-Y income, X-Y products sold, Z types of browsers), we could say something about which style of testing would make more sense. In considering both arguments, I have to say that in my experience, automation can go beyond what a human can test, but the value provided often is different than that provided by manual testing. On the other hand, often the results require human analysis, meaning that human judgment is still required. Take a load test that knocks over a system after throwing 500% of normal load at it: a human is required to do the analysis and render a value judgment. I have also seen some development shops that have gone without testers. At least in one case, they seemed to have almost pulled it off in a superficial view, but once you dig in, I think it became clear that some people or places do need testers. I don't think there is a way of giving detailed advice that is universal, and without some well controlled studies, it may be impossible to tell when a particular methodology is superior in a given context.

While I can't speak to what method is the best or which are superior to others, I can speak to what worked and what didn't for me in my context. Using only my own contexts and what I have seen, I can make a few broad statements. Few people do automation well. The most successful automation does not involve a UI or, when it does, it is in a manumated process. Those who do automation well are treasures. More people, though still few, do manual testing well. These people are also treasures. If you have only bench testing by the developers, adding either automated or manual testing (or checking) is likely to increase the quality of the product in small to medium size organizations. I am not sure how to logically organize test/development for large organizations (more than 500 people in software development).

Not everything is easy to test by code. Not everything is easy to test by people. If you can't get people to do the testing, either it might not matter enough or you should automate it and accept the limits of checking. If your automation keeps breaking, either your software is flaky or perhaps it shouldn't be automated (yet, if ever). Testing is hard and you need people who understand testing, be they developers or testers. For that matter, you also need people to understand software development, the business, the customers and probably the accounting. How you label these people may affect how they do the job, and maybe it matters, but what matters most is that the job is done as much as it needs to be done.

Ultimately, software development is hard. Dr. Whittaker and James Bach come at the problems from very different places, but both want to improve the quality of software. Even with their extremely different views both have contributed towards their goals. These extremes do cause some polarization and sadly this post might sound negative towards both their views, but it is hard to find common ground between two extreme views. On the other hand, Whittaker helped make Visual Studio 2008 and 2010 better at testing while Bach has been working on perfecting RST! Both these men have worked towards their visions and have created useful artifacts from their near disparate visions. While I have disagreements with both sides, I am glad they were around making my job of testing better and easier.

So if I haven't said it yet, thank you.

One last thing. If Dr. James Whittaker or James Bach respond to this with any corrections, I will update this post. I may be challenging my understanding of their ideas but I am not trying to put words in their mouths.

Tuesday, November 4, 2014

Consultant's War

My co-contributor Isaac has been pondering the fact that we see more consultants blogging regularly, speaking their mind and controlling the things we talk about. I don't care if it is Dorothy "Dot" Graham, Markus Gärtner, Matt Heusser, Michael Bolton, Michael Larsen (Correction: As Matt pointed out, I got my facts mixed up and Michael is not a consultant), Karen Nicole Johnson, James Bach or Rex Black. There are dozens of consultants I could have listed (I mean, how could I have missed Doug Hoffman!), some of whom I have personally worked with. So this is not meant to be a personal attack on any of them. I realize I list more CDT folk than others, and while that is not intentional, I read less of the thought leaders from the other schools. If you look, I bet most of the people you read either are or were consultants [In the interest of full disclosure, I have written a handful of articles and have been paid, but I am not nor have I ever been a consultant, and with you reading this, it is at least one counter-argument, but we'll get to that in a bit.]. If you made it here, there is little doubt you've read at least one of those authors' works at least once. They are stars! I mean that literally. I found this image of James Bach just by searching his name:

I didn't even use image search to find that, it came up on the first main page of my Google search results! I respect James, he writes well and has interesting ideas, but I have no opinion on his actual testing skills, as using his own measure, I have not seen him perform. Keeping that in mind, I have not seen any of the above consultants do any extended performances in testing, even if I have listened to many of them talk and gained insights from them. They know how to communicate and they are often awesome writers. Much better than I am. They all are senior in the sense that they have done testing for years. Some of them disagree with others, making it often a judgment call of their written works on who to listen to. Some of it is the author's voice. In my opinion, Karen Johnson is a much softer and gentler voice than James Bach. But some of it is factual. I have documented several debates between Rex Black and various other people I have listed. Rex has substantially different ideas on how the world should work regarding testing.

James Christie, another consultant, brought up standards at CAST 2014. It was a good talk and clearly he had some valid concerns about the standards that have been created. I have talked about that earlier (I am not going to include as many 'justifying' links as all my points and links are in the earlier article). I am actually not terribly interested in the arguments and in reality, ISO 29119 is more of a placeholder than the actual point of interest. I am more interested in discussing who is creating these debates. In part we are in a war between consultants and in part we are in a war of 'bed making'. Clearly the loudest voices are from those who have free time and have money at stake. The pro-ISO side has taken the stance of doing as little communication as possible, because it is hard to have a debate when one side won't talk. It is a tactical choice, and it is very difficult to compromise when one side doesn't speak. Particularly when the standard is already in place, the pro-standards side doesn't have much to gain from the messy-bed side who wants the standard withdrawn.

Both groups of consultants have money to gain from it. A defacto-RST-standard would make Bach a richer man than he already is. Matt Heusser's LST does give at least some competition of ideas, but still that isn't much more diversity. The standards would probably make the pro-standards consultants more money as they can say, "as the creator of the standard I know how to implement it." Even if they made no more money from making the standard, they will be better known for having made it. Even if I assume that no one was doing this out of self-interest, the people best represented are the consultants and to a lesser degree, the academics, not the practitioners who may feel the most impact. Those leading the charge both for and against the standard are primarily consultants or recent ex-consultants. Clearly this is a war being waged by the people with the most time, and those are the consultants. Ask yourself, of those you have heard debate the issue, what percentage are just working for a company?

Granted, I am not a consultant, but it takes a LOT of effort to write these posts. It isn't marketing for me, except possibly for future job hunting, and the hope that I will help other people in the profession. I know non-consultants are talking about it, but we don't have a lot of champions who aren't consultants. Maybe most senior level testers become consultants, perhaps due to disillusionment with testing at their companies. Maybe that is why consultants fight so bitterly hard for and against things like standards. Perhaps my assertion that money at the table is a part of it is just idle speculation not really fit for print. I can honestly believe that to be the case for possibly the large majority of the consultants involved.

Then what is it that causes these differences of view? Well let me go back to the bed making. To quote Steve Yegge:

For what it is worth, I tend to be against bed making, I think having all this formal work and making checklists is rather pointless unless they fit what I am doing. Having one checklist to rule them all with a disclaimer that YMMV and do the bits you want doesn’t sound much like a standard, but those whom like their bed nice and neat probably feel very happy when they come home at night. The ‘truth’ about the value of making your bed, the evidence that it is better is less than clear. Maybe one day we will have solid evidence in a particular area that a particular method is better than another, but we aren’t there yet in my opinion. But still I don’t make my bed. In case you are wondering, I think this is probably one of the hardest questions we have in the industry: What methods work best given a particular context and what parts of the context matter most?

From: http://qainsight.net/2008/04/08/james-bach-the-qa-hero/

ISO 29119

James Christie, another consultant, brought up standards at CAST 2014. It was a good talk and clearly he had some valid concerns about the standards that have been created. I have talked about that earlier (I am not going to include as many 'justifying' links, as all my points and links are in the earlier article). I am actually not terribly interested in the arguments, and in reality ISO 29119 is more of a placeholder than the actual point of interest. I am more interested in discussing who is creating these debates. In part we are in a war between consultants and in part we are in a war of 'bed making'. Clearly the loudest voices are from those who have free time and have money at stake. The pro-ISO side has taken the stance of doing as little communication as possible, because it is hard to have a debate when one side won't talk. It is a tactical choice, and it is very difficult to compromise when one side doesn't speak. Particularly since the standard is already in place, the pro-standards side doesn't have much to gain from the messy-bed side, which wants the standard withdrawn.

Both groups of consultants have money to gain from it. A de facto RST standard would make Bach a richer man than he already is. Matt Heusser's LST does give at least some competition of ideas, but still that isn't much more diversity. The standards would probably make the pro-standards consultants more money, as they can say, "as the creator of the standard I know how to implement it." Even if they made no more money from making the standard, they will be better known for having made it. Even if I assume that no one was doing this out of self-interest, the people best represented are the consultants and, to a lesser degree, the academics, not the practitioners who may feel the most impact. Those leading the charge both for and against the standard are primarily consultants or recent ex-consultants. Clearly this is a war waged by the people with the most time, and those are the consultants. Ask yourself, of those you have heard debate the issue, what percentage are just working for a company?

Granted, I am not a consultant, but it takes a LOT of effort to write these posts. It isn't marketing for me, except possibly for future job hunting, and the hope that I will help other people in the profession. I know non-consultants are talking about it, but we don't have a lot of champions who aren't consultants. Maybe most senior-level testers become consultants, perhaps due to disillusionment with testing at their companies. Maybe that is why consultants fight so bitterly hard for and against things like standards. Perhaps my assertion that money at the table is a part of it is just idle speculation not really fit for print. I can honestly believe that to be the case for possibly the large majority of the consultants involved.

Bed: Do you make yours?

Then what is it that causes these differences of view? Well, let me go back to the bed making. To quote Steve Yegge:

I had a high school English teacher who, on the last day of the semester, much to everyone's surprise, claimed she could tell just by looking at each of us whether we're a slob or a neat-freak. She also claimed (and I think I agree with this) that the dividing line between slob and neat-freak, the sure-fire indicator, is whether you make your bed each morning. Then she pointed at each of us in turn and announced "slob" or "neat-freak". Everyone agreed she had us pegged. (And most of us were slobs. Making beds is for chumps.)

That seems like a pretty good heuristic and I think it is also a good analogy. Often those who want more documentation, with things well understood before starting the work and more of a feeling of being in control via documentation, are those who feel standards are a good idea. They want their metaphorical beds made. They like having lots of details written down, they like checklists and would rather make the bed than have a ‘mess’ left all day. A nice neat made bed feels good to them. Then there are the people who see that neat bed and think it is a waste of time at best. At worst, they think that someone will start making them make their bed too. Personally, I think "Making beds is for chumps", just like Steve Yegge. Jeff Atwood would go even further and call it a religious viewpoint:

But software development is, and has always been, a religion. We band together into groups of people who believe the same things, with very little basis for proving any of those beliefs. Java versus .NET. Microsoft versus Google. Static languages versus Dynamic languages. We may kid ourselves into believing we're "computer scientists", but when was the last time you used a hypothesis and a control to prove anything? We're too busy solving customer problems in the chosen tool, unbeliever!

Clearly the consultants, many of whom I take valuable bits of data from, care about what they do, and they have tried to lock down their empirical knowledge into demonstrable truths. But that doesn't mean they have 'the truth'. I know as testers we try to have controls, but I am not so convinced we have testing down to a science. It is why I feel we aren't ready for standards, but I also recognize the limits of my own knowledge.

For what it is worth, I tend to be against bed making. I think having all this formal work and making checklists is rather pointless unless they fit what I am doing. Having one checklist to rule them all, with a disclaimer that YMMV and do the bits you want, doesn’t sound much like a standard, but those who like their bed nice and neat probably feel very happy when they come home at night. The ‘truth’ about the value of making your bed, the evidence that it is better, is less than clear. Maybe one day we will have solid evidence in a particular area that a particular method is better than another, but we aren’t there yet in my opinion. But still I don’t make my bed. In case you are wondering, I think this is probably one of the hardest questions we have in the industry: What methods work best given a particular context, and what parts of the context matter most?
